Hello! When I ran dba.analyze() on my DBA object, which links to bam files, I got errors. My command was as below.

anaScramblecst <- dba.analyze(Scramblecst)

It returned the errors " No matching chromosomes found for file: ..bam" and "Blacklist error: Error in BamFile(file, character(0)): 'file' must be character(1) and not NA"

Then I tried

Scramblecstbl<-dba.blacklist(Scramblecst,blacklist=DBA_BLACKLIST_HG19,greylist=FALSE)

It successfully applied the blacklist and removed some intervals. Then I passed the filtered object to dba.analyze() again.

anaScramblecstbl <- dba.analyze(Scramblecstbl)

anaScramblecstbl <- dba.analyze(Scramblecstbl,bBlacklist = TRUE)

anaScramblecstbl <- dba.analyze(Scramblecstbl,bBlacklist = FALSE)

None of these commands worked either. They all gave the same errors: "Blacklist error: Error in strsplit(genome, "BSgenome."): non-character argument" and "Unable to apply Blacklist/Greylist."

To figure out what was wrong, I also moved one of my bam files to the sample folder of the DiffBind vignette and changed the file name. I ran dba() and dba.analyze(tamoxifen), and they worked. So I am confused about what the Blacklist errors mean. How can I fix them?

Thank you very much!
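A minimal sketch of one thing to try, assuming the failure comes from the automatic blacklist/greylist step that dba.analyze() runs by default (the follow-up further down reports that bGreylist=FALSE let the analysis run). The wrapper name `analyze_without_lists` is hypothetical; `bBlacklist` and `bGreylist` are arguments of dba.analyze().

```r
# Sketch only: skip the automatic blacklist/greylist step inside dba.analyze().
# `dba_obj` stands for a counted DBA object with contrasts set (e.g. Scramblecst).
# The wrapper name is hypothetical; only dba.analyze() comes from DiffBind.
analyze_without_lists <- function(dba_obj) {
  DiffBind::dba.analyze(dba_obj, bBlacklist = FALSE, bGreylist = FALSE)
}

# Usage (needs DiffBind installed and the bam files the object points to):
# anaScramblecst <- analyze_without_lists(Scramblecst)
```

If a blacklist is still wanted, it can be applied beforehand with dba.blacklist(..., greylist=FALSE), as in the command shown above, so that only the greylist step is skipped.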

Really appreciate your help, Dr. Stark. The setting of

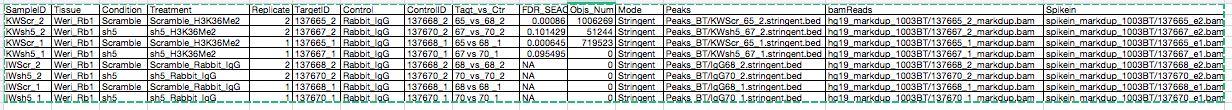

bGreylist=FALSEindeed letdba.analyzework. My data sheet for DBA object had no control reads but spikein bam files. The control reads should be like input reads in ChIP-seq, right? My samples are not from ChIP-seq. My data sheet is like this.After

dba(),dba.count(),dba.normalize(),dba.contrast()anddba.analyze(), it gave a DBA object like this1 KWScr_2 Weri_Rb1 Scramble Scramble_H3K36Me2 2 7694871 0.24

2 KWsh5_2 Weri_Rb1 sh5 sh5_H3K36Me2 2 9184486 0.12

3 KWScr_1 Weri_Rb1 Scramble Scramble_H3K36Me2 1 5194043 0.27

4 KWsh5_1 Weri_Rb1 sh5 sh5_H3K36Me2 1 6161260 0.11

5 IWScr_2 Weri_Rb1 Scramble Scramble_Rabbit_IgG 2 9565498 0.11

6 IWsh5_2 Weri_Rb1 sh5 sh5_Rabbit_IgG 2 11111771 0.10

7 IWScr_1 Weri_Rb1 Scramble Scramble_Rabbit_IgG 1 6471552 0.10

8 IWsh5_1 Weri_Rb1 sh5 sh5_Rabbit_IgG 1 7626725 0.09

Design: [~Treatment] | 2 Contrasts:

1 Treatment Scramble_H3K36Me2 2 Scramble_Rabbit_IgG 2 239615

2 Treatment sh5_H3K36Me2 2 sh5_Rabbit_IgG 2 8349

The .bam files have very long names, so in my last post I changed them to ..bam, by which I actually meant xxx.bam. Sorry about the confusion.

Now the DBA object works with dba.plotVenn using the contrasts I set, but I still have 3 questions, to make sure what I tested was right.

1) In which step was the normalization done? Since the Spikein bam files were included in the data sheet, were they taken into account at a very early step such as dba.count(), or only at dba.normalize()? If the answer is the latter, which parameters in dba.normalize() determine whether the spike-in bam reads are introduced into the DBA object?

2) If I apply user-supplied normalization factors at the dba.normalize() step like this, does it mean the spike-in bam files from the data sheet were automatically discarded in normalization? I also want to know whether my user-supplied numeric vector satisfies the criteria. I think the numbers should be in the same order as my samples (rows), right?

3) The third question is a follow-up to my earlier post, "Comment: Error report when loading a 0-byte bed file for DBA object in Diffbind". I would like to get some advice on it. In my data sheet, I have some 0-peak bed files, which I generated manually for two reasons. (1) A 0-byte bed file from the peak caller could not be loaded into my DBA object. (2) I also need some IgG vs IgG peak sets (I think they contain 0 peaks, but the peak caller was unable to generate their bed files) for convenience when setting contrasts and using plotVenn.

Thank you very much!
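For the 0-byte bed files in question 3, a small check like the one below may help to see which peak files are empty or missing before calling dba(). This is only a sketch: the helper name `find_empty_peak_files` is hypothetical, and it assumes the sample sheet is a data frame with DiffBind-style `SampleID` and `Peaks` columns.

```r
# Hypothetical helper: report which peak files in a sample sheet are empty.
# Assumes `sample_sheet` has the columns `SampleID` and `Peaks` (file paths).
find_empty_peak_files <- function(sample_sheet) {
  paths <- as.character(sample_sheet$Peaks)  # guard against factor columns
  sizes <- file.size(paths)                  # NA when a file does not exist
  data.frame(
    SampleID = as.character(sample_sheet$SampleID),
    Peaks    = paths,
    missing  = is.na(sizes),
    empty    = !is.na(sizes) & sizes == 0,
    stringsAsFactors = FALSE
  )
}

# Usage, with a hypothetical sample sheet file name:
# sheet <- read.csv("samples.csv", stringsAsFactors = FALSE)
# subset(find_empty_peak_files(sheet), empty | missing)
```

It does not change how DiffBind handles such files; it only lists them so they can be dealt with deliberately.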

1) To utilize spike-in reads, you need to specify a value for spikein= when calling dba.normalize(). If spikein=TRUE, then the total number of reads in the Spikein bam file will be used to compute normalization factors. See the help page for dba.normalize().

2) If you explicitly specify a vector of normalization factors, this will override the ones that would be computed using the spike-in reads. There should be one value per sample, in the order that the samples appear in the sample sheet.

3) I'll look again at the 0-byte bed file for DBA object in DiffBind issue and see if I can suggest something.
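The two normalization routes from points 1) and 2) can be sketched roughly as follows. This is an illustration under assumptions, not a tested recipe: the helper names are hypothetical, the factor values in the usage comment are placeholders, and passing the numeric vector through the `normalize` argument is my reading of the dba.normalize() help page, so please check it against your DiffBind version.

```r
# Hypothetical helper: check that user-supplied factors line up with the samples.
# One numeric value per sample, in sample-sheet order, with no missing values.
check_norm_factors <- function(sample_ids, factors) {
  stopifnot(is.numeric(factors),
            length(factors) == length(sample_ids),
            !anyNA(factors))
  stats::setNames(factors, sample_ids)
}

# Route 1: let DiffBind use the Spikein bam files listed in the sample sheet.
normalize_with_spikein <- function(dba_obj) {
  DiffBind::dba.normalize(dba_obj, spikein = TRUE)
}

# Route 2: supply the factors directly (assumption: a numeric vector is
# accepted by `normalize`); the spike-in reads are then not used.
normalize_with_factors <- function(dba_obj, factors) {
  ids <- DiffBind::dba.show(dba_obj)$ID  # sample IDs in object order
  DiffBind::dba.normalize(dba_obj, normalize = check_norm_factors(ids, factors))
}

# Usage (placeholder values, one per sample, same order as the sample sheet):
# norm_obj <- normalize_with_factors(counted_obj, c(1, 1, 1, 1, 1, 1, 1, 1))
```

Naming the vector by sample ID before passing it in makes it easy to print and confirm by eye that each factor sits next to the intended sample.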

Really appreciate your help, Dr. Stark. After confirming the answers, I will apply the right setting to all my samples.